Our far-flung correspondents write:

BATON ROUGE looks like a great site. No pavement. No AC. No shelter. NONE of the issues that plague the sites those denialists are putting a spot light on. This looks like a great site. This looks like a site that meets the CRN guidelines. The great thing about this kind of site is you dont have to make adjustments. It follows standards. Can you please do the following.

- Download the giss RAW data for this site.

- Download the Giss adjusted data. Difference these to see what Hansen did to this COMPLIANT site.

- Post the adjustment and explain it.

- Otherwise, back in your hole rabbett.

Ever the pleasing bunny, Eli went to the GISTEMP site and downloaded the data for Baton Rouge. Then he plotted the raw and adjusted data on the same graph. And so, as per request, he plotted the differences.
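For bunnies who want to repeat the differencing at home, here is a minimal sketch. It assumes the raw and adjusted annual means have already been read into dictionaries keyed by year; the function name `difference` and the sample values are made up for illustration and are not the Baton Rouge record.

```python
def difference(raw, adjusted):
    """Return {year: adjusted - raw} for years present in both series."""
    common_years = sorted(set(raw) & set(adjusted))
    # Round to 0.01 C only to suppress floating-point noise in the display.
    return {year: round(adjusted[year] - raw[year], 2) for year in common_years}


# Hypothetical annual means in degrees C (illustrative only).
raw = {1990: 19.8, 1991: 20.1, 1992: 19.6}
adjusted = {1990: 19.7, 1991: 20.1, 1993: 19.9}

diff = difference(raw, adjusted)
print(diff)
```

Years missing from either series are skipped rather than filled in, so gaps in one record do not show up as spurious adjustments.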

And then the EverReadyRabett™ went to the NOAA data center site and read the station history and used the map to see where it had moved. Eli noted that the airport weather station only opened in 1932, and the location moved in 1945, 1978 and finally in 1995. The original airport was pretty much in the center of town (to the east of the river, use the map). In 1945 it moved a considerable distance to the north. Certainly pictures from 2007 don't tell us much about any of this, but the station history helps considerably.

Interestingly, the first record from the airport station(s) is from 1932, but the GISS record goes back to 1889!? you ask with interest **. Well, little mouse, we may not be able to answer that completely, but if you download data from the Global Historical Climatology Network, yes, the information from the Baton Rouge station does extend back to 1888. (It's a big download out there, don't do this trick on a 26kB modem. The BR station ID is 425722310090.) Eli also notes that there are several stations in the area that opened at the same time, including a number of rural stations.

The data sets incorporated into the GHCN reports are tested and corrected as described in two publications:

Peterson, T.C., and R.S. Vose, 1997: An overview of the Global Historical Climatology Network temperature database. Bulletin of the American Meteorological Society, 78 (12), 2837-2849.

Peterson, T.C., R. Vose, R. Schmoyer, and V. Razuvaev, 1998: Global Historical Climatology Network (GHCN) quality control of monthly temperature data. International Journal of Climatology, 18 (11), 1169-1179.

Frankly, Eli cannot resist quoting from the introduction of the second of these, which truly shows the difference between amateurs and pros:

In the process of creating GHCN, cautionary remarks were made that cast doubt on the quality of climate data. For example, a meteorologist working in a tropical country noticed one station had an unusually low variance. When he had an opportunity to visit that station, the observer proudly showed him his clean, white instrument shelter in a well cared for grass clearing. Unfortunately, the observer was never sent any instruments so every day he would go up to the shelter, guess the temperature, and dutifully write it down. Another story is about a station situated next to a steep hillside. A few of meters uphill from the station was a path which students used walking to and from school. On the way home from school, boys would stop and...well, let’s just say the gauge observations were greater than the actual rainfall. In the late 1800s, a European moving to Africa maintained his home country’s 19th Century siting practice of placing the thermometer under the eaves on the north wall of the house, despite the fact that he was now living south of the equator. Such disheartening anecdotes about individual stations are common and highlight the importance of QC of climate data.

While several commentators are enjoying a good piss on air conditioners and grills, those little boys are at least not doing the same on the stations, or at least not as yet, that we know of.

The point is that one can recover a data set with care and professional experience. Details of how the GHCN data is tested can be found in the two references.

Historically, the identification of outliers has been the primary emphasis of QC work (Grant and Leavenworth, 1972). In putting together GHCN v2 temperature data sets (hereafter simply GHCN) it was determined that there are a wide variety of problems with climate data that are not adequately addressed by outlier analysis. Many of these problems required specialized tests to detect. The tests developed to address QC problems fall into three categories. (i) There are the tests that apply to the entire source data set. These range from evaluation of biases inherent in a given data set to checking for processing errors; (ii) this type of test looks at the station time series as a whole. Mislocated stations are the most common problem detected by this category of test; (iii) the final group of tests examines the validity of individual data points. Outlier detection is, of course, included in this testing. A flow chart of these procedures is provided in Figure 1. It has been found that the entire suite of tests is necessary for comprehensive QC of GHCN.

So the Rabett traipsed over to Broadway and visited GISS, where he read that the various adjustments to the GHCN and USHCN data are described most recently in

Hansen, J.E., R. Ruedy, Mki. Sato, M. Imhoff, W. Lawrence, D. Easterling, T. Peterson, and T. Karl, 2001: A closer look at United States and global surface temperature change. J. Geophys. Res., 106, 23947-23963, doi:10.1029/2001JD000354.

In particular, as to the questions of our dear reader, Eli discovered that there is an urban adjustment, described in section

4.2.2. Urban adjustment. In the prior GISS analysis the time series for temperature change at an urban station was adjusted such that the temperature trends prior to 1950 and after 1950 were the same as the mean trends for all “rural” stations (population less than 10,000) located within 1000 km (with the rural stations weighted inversely with distance). In other words it was a two-legged adjustment with the two legs hinged at 1950 and with the slopes of the two lines chosen to minimize the mean square difference between the adjusted urban record and the mean of its rural neighbors.

The urban adjustment in the current GISS analysis is a similar two-legged adjustment, but the date of the hinge point is no longer fixed at 1950, the maximum distance used for rural neighbors is 500 km provided that sufficient stations are available, and “small-town” (population 10,000 to 50,000) stations are also adjusted. The hinge date is now also chosen to minimize the difference between the adjusted urban record and the mean of its neighbors. In the United States (and nearby Canada and Mexico regions) the rural stations are now those that are “unlit” in satellite data, but in the rest of the world, rural stations are still defined to be places with a population less than 10,000. The added flexibility in the hinge point allows more realistic local adjustments, as the initiation of significant urban growth occurred at different times in different parts of the world.
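To make the "two-legged" idea concrete, here is a rough sketch of a hinged fit in plain Python. It is not the GISS code. It assumes you already have the difference between an urban record and the mean of its rural neighbors, fits two straight lines joined at a hinge year by least squares, and tries each candidate hinge to find the one with the smallest squared error. The names `solve3`, `fit_two_legs`, `best_hinge` and the `margin` parameter are hypothetical, and the data are synthetic.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x


def fit_two_legs(years, diffs, hinge):
    """Least-squares fit of d(t) = a + b1*min(t-h, 0) + b2*max(t-h, 0)."""
    rows = [(1.0, min(y - hinge, 0), max(y - hinge, 0)) for y in years]
    # Normal equations (X^T X) coef = X^T d for the three parameters.
    A = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    b = [sum(r[i] * d for r, d in zip(rows, diffs)) for i in range(3)]
    coef = solve3(A, b)
    sse = sum((d - sum(c * v for c, v in zip(coef, r))) ** 2
              for r, d in zip(rows, diffs))
    return coef, sse


def best_hinge(years, diffs, margin=5):
    """Try each interior year as the hinge; keep the one with the smallest error."""
    candidates = years[margin:-margin]  # keep a few points on each leg
    return min(((h,) + fit_two_legs(years, diffs, h) for h in candidates),
               key=lambda t: t[2])


# Synthetic urban-minus-rural difference: flat until 1950, then +0.02 C/yr.
years = list(range(1900, 2001))
diffs = [0.02 * max(y - 1950, 0) for y in years]

hinge, coef, sse = best_hinge(years, diffs)
print(hinge, [round(c, 4) for c in coef])
```

Subtracting the fitted two-legged line from the urban record would then give an adjusted series whose long-term trend follows the rural neighbors. The inverse-distance weighting of the neighbors and the 500 km cutoff described in the paper are left out of this sketch.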

That sounds a lot like the differences between the raw and adjusted data shown above, about which our correspondent inquired. But still, why the 0.1 C steps in the adjustment?

GHCN data is reported in 0.1 degree increments.
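A small sketch shows why that produces a staircase. It assumes, for illustration only, a smooth linear adjustment of 0.01 C per year that is then reported at the same 0.1 degree resolution; the function name `rounded_adjustment` and the slope are made up.

```python
def rounded_adjustment(start_year, end_year, slope_per_year, step=0.1):
    """Smooth linear adjustment, reported to the nearest `step` degrees."""
    out = {}
    for year in range(start_year, end_year + 1):
        smooth = slope_per_year * (year - start_year)
        # Snap to the reporting resolution; the outer round tidies float noise.
        out[year] = round(round(smooth / step) * step, 1)
    return out


adj = rounded_adjustment(1950, 1990, 0.01)
distinct = sorted(set(adj.values()))
print(distinct)
```

Forty-one years of a smoothly growing adjustment collapse onto only five reported values, so a plot of the differences looks like steps rather than a ramp.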

RTFR.

** Thanks to R. Ruedy at GISS for a prompt and informative response to our inquiry.

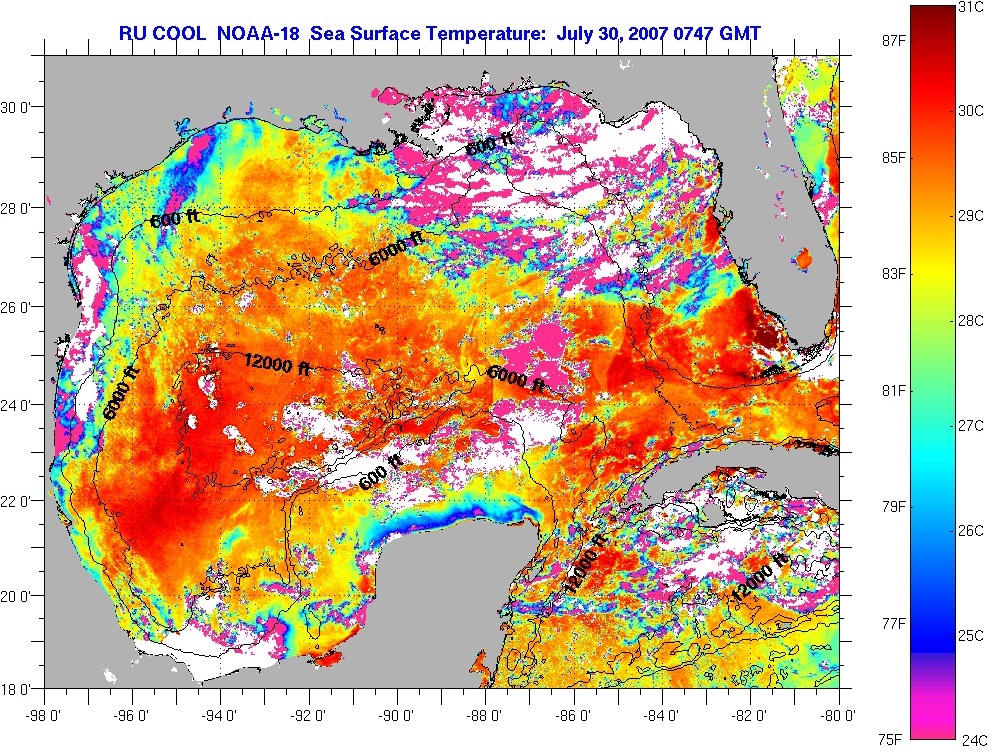

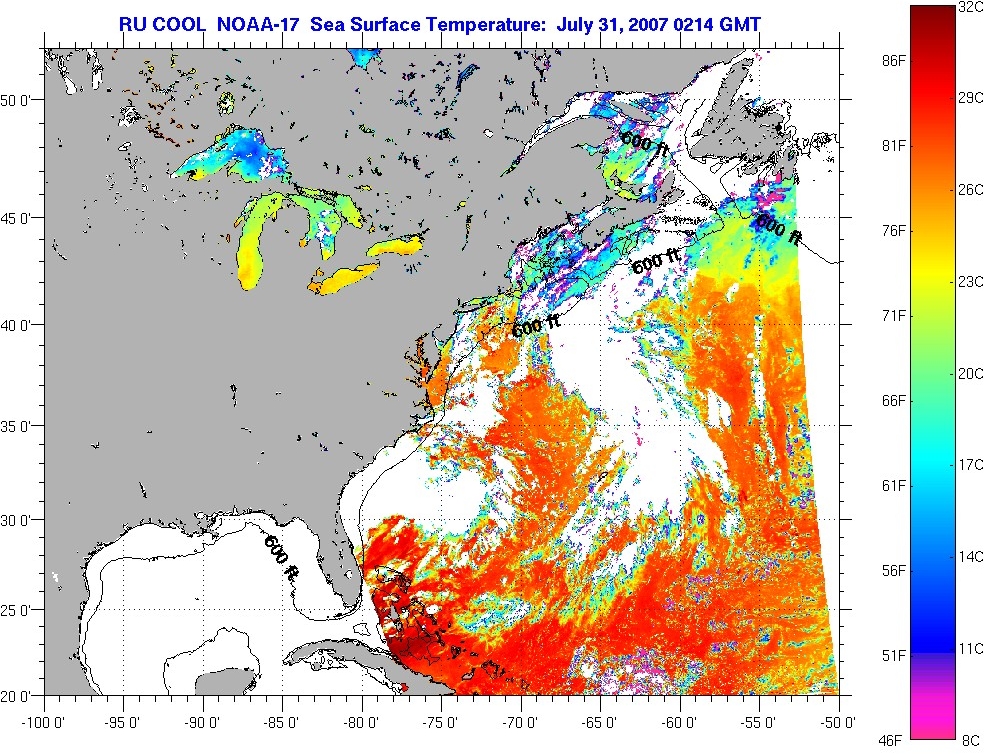

and the Gulf is turning into a hibachi.
